Aug 30, 2024

Understanding the Security Features in AI Productivity Tools

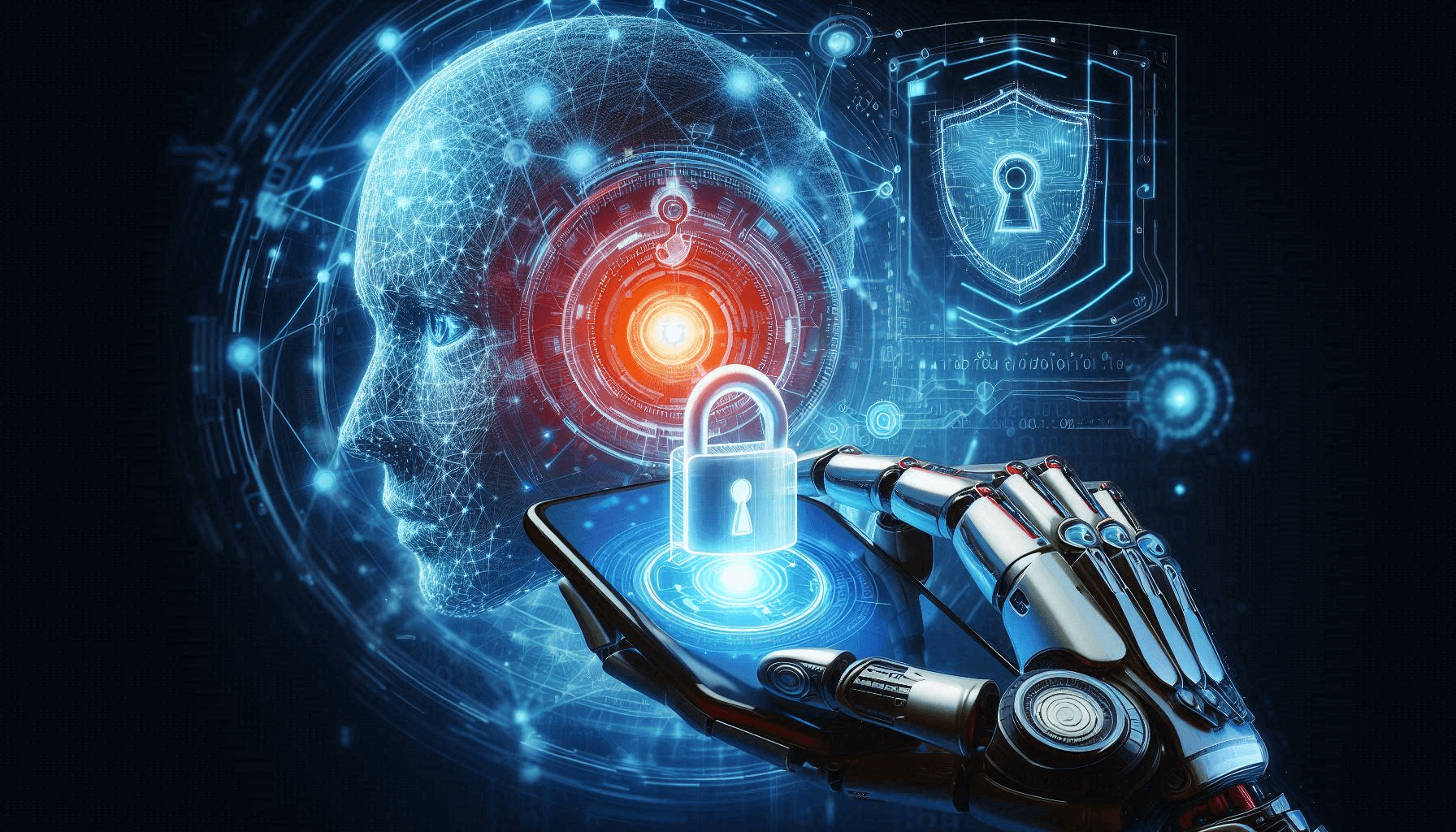

When you embed AI tools into your daily workflow, security should be a top concern. AI productivity tools, in the form of AI assistants, AI chatbots, and AI-powered personal assistants, have recently flooded the market. That raises a key question: how well do these tools safeguard sensitive data?

The Importance of Security in Artificial Intelligence Tools

AI tools now range from email assistants that draft and manage your messages to AI calendars that organize your schedule. In doing so, they handle highly sensitive information, from personal correspondence to confidential business data. That is why sound security is paramount.

Leading AI productivity tools have adopted strong security measures, including AES-256 encryption and third-party assessments such as CASA Tier 2 verification and Google verification. Together, these measures are designed to protect data passing through the tools from unauthorized access and attack attempts.

What Is AES-256 Encryption?

AES-256 is considered one of the strongest encryption standards available. It is a symmetric block cipher: the same 256-bit key is used both to encrypt and to decrypt data, which is processed in 128-bit blocks. With current technology, recovering a 256-bit key by brute force is computationally infeasible, so AES-256 provides a very strong layer of protection for data stored or processed by AI tools.
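To put the 256-bit key size in perspective, here is a minimal Python sketch that generates a random 256-bit key with the standard-library `secrets` module and estimates how long an exhaustive key search would take. The function names and the guess rate of 10^18 keys per second are illustrative assumptions, not figures from any real tool, and the estimate assumes an attacker finds the key after searching half the key space on average. The sketch does not perform AES encryption itself.

```python
import secrets

KEY_BITS = 256
GUESSES_PER_SECOND = 10**18  # assumption: a hypothetical, extremely fast attacker
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def generate_aes256_key() -> bytes:
    """Return 32 cryptographically random bytes (256 bits), usable as an AES-256 key."""
    return secrets.token_bytes(KEY_BITS // 8)


def expected_brute_force_years(key_bits: int, guesses_per_second: int) -> float:
    """Expected years to find a key by exhaustive search (half the key space on average)."""
    expected_guesses = 2 ** (key_bits - 1)
    return expected_guesses / guesses_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    key = generate_aes256_key()
    print(f"key length: {len(key) * 8} bits")
    years = expected_brute_force_years(KEY_BITS, GUESSES_PER_SECOND)
    print(f"expected brute-force time: {years:.2e} years")
```

Under these assumptions the estimate comes out to roughly 1.8 × 10^51 years; for comparison, the universe is about 1.4 × 10^10 years old.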

Think of it as a digital vault that can only be opened with the right key. AES-256 is used by financial institutions, government agencies, and, increasingly, AI productivity tools to safeguard sensitive data.

Key Security Features in AI Productivity Tools

Key security measures for protecting data in AI tools include:

1. AES-256/TLS 1.3: Encryption is the foundation of data security. Many AI productivity tools use the AES-256 standard to protect data at rest; once data is encrypted this way, unauthorized parties have very little chance of reading it without the key. Data in transit is additionally protected by TLS 1.3 using an AES-256 cipher suite.

2. CASA Tier 2 Verification: CASA (Cloud Application Security Assessment) is the App Defense Alliance framework for assessing the security of cloud applications, and Tier 2 provides a higher level of assurance than self-assessment alone. An AI assistant that has passed CASA Tier 2 verification has met a defined set of security requirements for how it stores and processes data, which offers reassurance about the safety of your data.

3. GDPR Compliance: Data protection laws such as the GDPR set strict requirements for how companies process personal data. GDPR-compliant tools process data securely, provide mechanisms for users to exercise their GDPR rights (such as access and erasure), and give users control over their data.

4. SOC 2 Type 2 Compliance: Dataleap is currently working toward SOC 2 Type 2 compliance. A SOC 2 Type 2 report is an independent auditor's attestation that an organization's security controls are not only well designed but also operated effectively over a period of time. Achieving it will further reinforce Dataleap's commitment to handling user data securely.

5. ISO 27001 Certification: As part of its commitment to continuous improvement, Dataleap intends to obtain ISO 27001 certification by Q4 2024. ISO/IEC 27001 is the internationally recognized standard for information security management systems (ISMS). Certification will help protect the integrity of customer data, reduce risk, and support continuity of service, giving users even stronger assurance that their data is protected.
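For data in transit, a client can refuse any connection that negotiates a protocol older than TLS 1.3. The sketch below uses Python's standard `ssl` module; the helper name `make_tls13_client_context` is hypothetical, and this illustrates the general idea rather than how any specific AI tool configures its connections. The exact TLS 1.3 cipher suite (for example `TLS_AES_256_GCM_SHA384`, which pairs TLS 1.3 with AES-256) is negotiated with the server.

```python
import ssl


def make_tls13_client_context() -> ssl.SSLContext:
    """Build a client-side SSL context that refuses any protocol version below TLS 1.3."""
    # create_default_context() enables certificate verification and hostname checking.
    ctx = ssl.create_default_context()
    # Reject TLS 1.2 and older during the handshake.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx
```

To check a live connection, wrap a socket with `ctx.wrap_socket(sock, server_hostname=host)`; the resulting socket's `version()` and `cipher()` methods report the negotiated protocol and cipher suite. A handshake with a server that only supports TLS 1.2 or older should fail with an `ssl.SSLError`.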

Many tools also support data segregation through row-level access controls. Separating data by workspace and organization ensures that only users with the proper permissions can access sensitive information.

A common concern with AI tools is how they use the data fed into them. Choose tools whose models do not use your input data for training. Such a policy keeps user data confidential and prevents it from being repurposed for model improvement, which protects privacy.
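The workspace- and organization-level segregation described above can be sketched as a row-level filter. In this minimal Python example, `Record`, `User`, and `visible_records` are hypothetical names. Real systems usually enforce this rule in the database (for instance with PostgreSQL row-level security policies) rather than in application code, so treat this as an illustration of the rule, not an implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """A hypothetical stored row, tagged with the organization and workspace it belongs to."""
    org_id: str
    workspace_id: str
    content: str


@dataclass(frozen=True)
class User:
    """A hypothetical user who belongs to one organization and may access some workspaces."""
    user_id: str
    org_id: str
    workspace_ids: frozenset[str]


def visible_records(user: User, records: list[Record]) -> list[Record]:
    """Row-level filter: keep only rows in the user's organization AND a permitted workspace."""
    return [
        r
        for r in records
        if r.org_id == user.org_id and r.workspace_id in user.workspace_ids
    ]
```

Checking the organization as well as the workspace matters: if workspace identifiers were ever reused across organizations, a workspace check alone could leak another organization's rows.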

Access to sensitive data should also be tightly restricted. Under the principle of least privilege, each person or service is granted only the access it strictly needs, so sensitive data is available only to essential personnel. This minimizes the risk of data breaches and unauthorized disclosure of sensitive information.
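The principle of least privilege is often implemented as a default-deny permission check: each role is granted only the permissions it needs, and anything not explicitly granted is refused. The roles, permissions, and the `is_allowed` helper below are hypothetical examples, not taken from any particular product.

```python
from __future__ import annotations

from enum import Enum


class Permission(Enum):
    READ = "read"
    WRITE = "write"
    ADMIN = "admin"


# Hypothetical roles: each is granted only the permissions its job requires.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "viewer": frozenset({Permission.READ}),
    "editor": frozenset({Permission.READ, Permission.WRITE}),
    "security_admin": frozenset({Permission.READ, Permission.WRITE, Permission.ADMIN}),
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Default-deny check: unknown roles and ungranted permissions are both refused."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())
```

Because `is_allowed` falls back to an empty permission set for unknown roles, a typo or a missing role fails closed rather than open.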

The Importance of Security for AI Chatbots and AI Assistants

The growing use of AI chatbots, chatbot assistants, and virtual AI agents in customer service and business communications has underscored the importance of security. These technologies often handle sensitive customer information, which makes them prime targets for cyberattacks.

By using AES-256 encryption, these tools help ensure that even if data is intercepted, it remains unreadable to unauthorized parties. Google/Meta verification adds a further layer of assurance by indicating that a tool has passed that platform's security review.

Best Practices for Choosing Secure AI Tools

When choosing AI tools for business or personal use, it's important to consider their security features. Look for AES-256 encryption; SOC 2, ISO 27001, and GDPR compliance; CASA Tier 2 verification, which checks the tool against defined security standards; and Google/Meta verification, which indicates that the tool has passed that platform's rigorous security review.
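One way to apply this checklist when comparing vendors is to record which controls each one documents and list what is missing. This is a hypothetical Python sketch: `VendorAssessment` and `REQUIRED_CONTROLS` are illustrative names whose entries simply mirror the criteria in the paragraph above, and a vendor's claims should still be checked against its actual reports and certificates.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical checklist mirroring the criteria listed above, in order of review.
REQUIRED_CONTROLS = (
    "AES-256 encryption",
    "SOC 2 Type 2 report",
    "GDPR compliance",
    "ISO 27001 certification",
    "CASA Tier 2 verification",
    "Google/Meta verification",
)


@dataclass
class VendorAssessment:
    """Records which checklist controls a vendor has documented."""
    name: str
    claimed_controls: set[str] = field(default_factory=set)

    def missing_controls(self) -> list[str]:
        """Checklist controls the vendor has not documented, in checklist order."""
        return [c for c in REQUIRED_CONTROLS if c not in self.claimed_controls]
```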

Favor tools that take security seriously and are transparent about their encryption standards and verification processes. For additional peace of mind, reviews or certifications from independent security firms can help validate their claims.

As security practices for AI tools mature, expect more of them to adopt strong encryption such as AES-256, obtain Google/Meta verification, and reach higher CASA tiers. AI is not just about boosting productivity; it is also about securing the data that fuels that productivity.

Conclusion

From AI calendar management to AI-powered personal assistants, security features will play a crucial role in determining which tools dominate the market. Users will increasingly demand transparency and trust, pushing AI developers to innovate not only in functionality but also in protecting user data.

Understanding the security features of AI productivity tools lets you make informed decisions that protect sensitive data while still benefiting from the latest technology. Whether you use an AI assistant for business or a chatbot to automate customer service, knowing how the tool secures your data is crucial for maintaining trust and efficiency in today's digital landscape.